A Workforce Strategy for the Age of AI: Why the future of work isn't about replacement—it's about a great reshuffle

While CEOs invest heavily in AI, employee anxiety creates friction. Srini Koushik argues that we are entering not a "Replacement" era but a "Reshuffle," in which AI unbundles skills from roles rather than making humans obsolete. Leaders must move beyond automation and integrate Cognitive Thinking Partners.

By Srini Koushik

There is a growing paradox in the modern enterprise. CEOs are allocating massive capital to AI transformation, yet inside the organization, the conversation is dominated by anxiety. For most employees, AI remains a "Black Box"—or worse, a looming threat to their livelihood.

This fear is creating friction. It leads to slow adoption, shadow IT, and a culture of defensiveness.

But the fear is based on a fundamental misunderstanding. We are not facing a "Replacement" era; we are facing a Reshuffle. As Sangeet Paul Choudary argues in his book Reshuffle, AI doesn't just automate tasks; it "unbundles" skills from roles. This unbundling doesn't make humans obsolete—it clarifies where human value truly resides.

To navigate this, leaders must stop treating AI as a single-purpose automation tool. We need to stop "installing" algorithms and start integrating Cognitive Thinking Partners.

The Great Reshuffle: Wisdom meets Energy

The organizations that win in this new era won't be the ones that cut the most heads. They will be the ones that use AI to bridge the two most valuable assets in their workforce.

As AI commoditizes execution, value migrates to two specific human bottlenecks:

- The Wisdom of the Experienced (Judgment): For decades, we paid senior people to execute tasks. That was a waste of their talent. With AI handling execution, the value of the veteran shifts to Judgment and Curation. They become the "verifiers," the guardrails that turn raw intelligence into safe business value. Their deep institutional memory no longer slows execution down; it becomes the quality filter for the AI's output.

- The Energy of the New (Curiosity): The emerging workforce, like the students I teach at Ohio State, brings a different asset. They are unburdened by "how we've always done it." Their value is Question Framing. Because they are digital natives, they can use AI to iterate through ideas at a velocity traditional workflows cannot match. They don't use AI to avoid work; they use it to explore the solution space faster.

AI Fluency is the bridge between these two groups. It allows the veteran to scale their wisdom and the new entrant to accelerate their impact.

Designing the Cognitive Partnership: System 1 and System 2

To integrate these partners effectively, we must look at the cognitive architecture of the team. We can apply Daniel Kahneman’s framework from Thinking, Fast and Slow to define exactly how humans and AI should interact. The goal is to use the Cognitive Thinking Partner to compensate for human cognitive limits, and vice versa.

- AI as System 2 (The Guardrail): Humans are prone to System 1 errors—we get tired, we rely on heuristics, and we have "bad days." In high-stakes roles like Compliance, Legal Review, or Cybersecurity, the AI acts as our System 2. It is the slow, deliberate, logical check that never suffers from fatigue. It forces the human to pause and review the evidence, preventing costly errors born of intuition or speed.

- AI as System 1 (The Generator): Conversely, in creative or divergent phases, the AI acts as a hyper-fast System 1. It can generate fifty headlines, ten architectural variations, or five strategic scenarios in seconds. Here, the human must engage their System 2 to deliberate, curate, and verify that output.

This bi-directional flow—where the Cognitive Partner sometimes speeds us up (System 1) and sometimes slows us down (System 2)—is the hallmark of a mature AI strategy.

The Governance Framework: Embedding the Partner

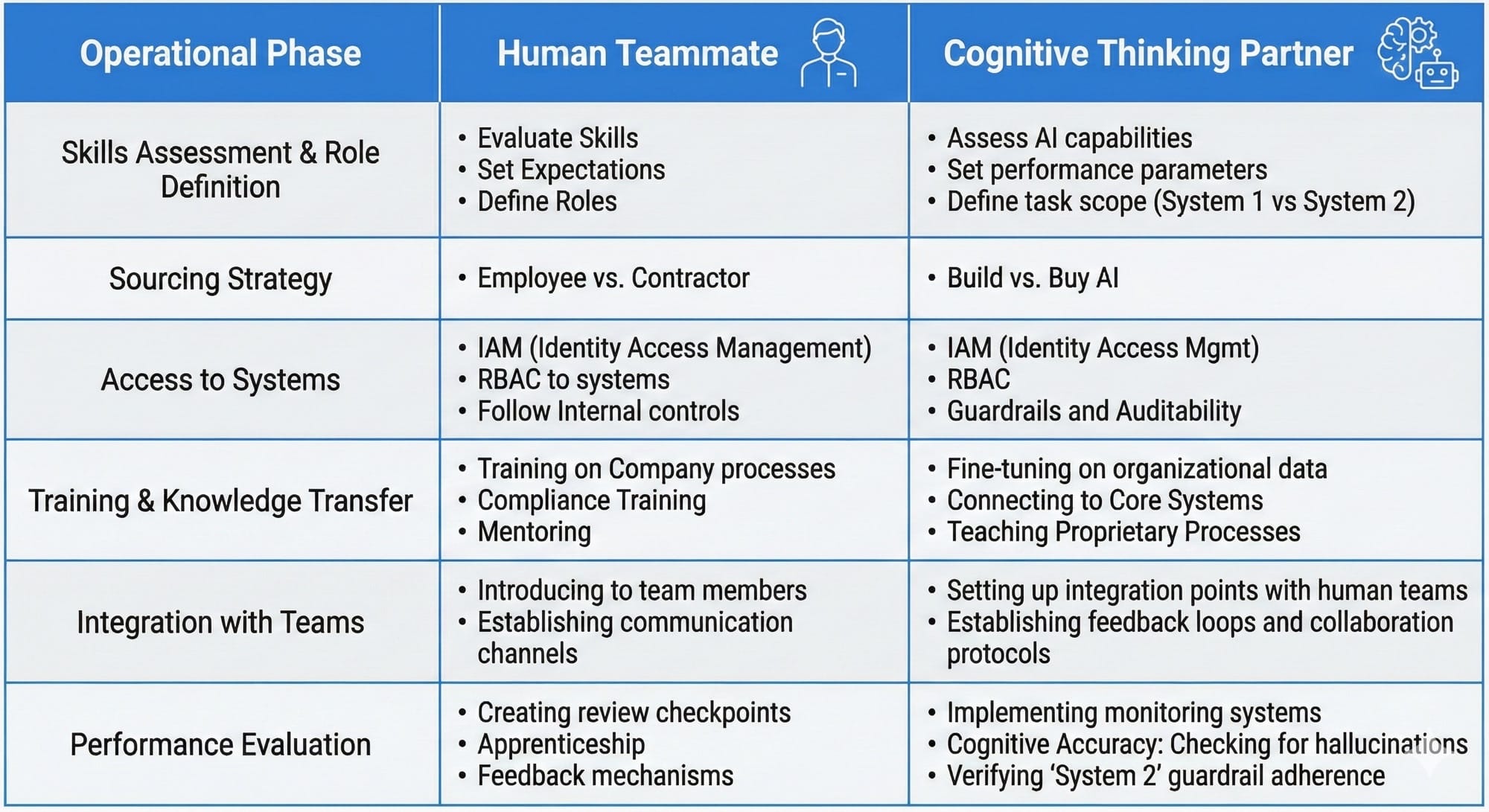

To make this partnership work, we have to change our management philosophy. Embedding AI agents must be done with the same rigor as adding new team members.

We must elevate AI from a cost center to a verifiable, accountable resource. The following framework outlines how we apply human HR discipline to our Cognitive Partners:

The Background Check (Data Ethics)

When we hire a human, we verify their history and assess inherent risk. We must do the same for AI.

This is the Data Background Check. Just as you wouldn't partner with a candidate educated solely on unverified conspiracy theories, you cannot engage a model trained on biased, proprietary, or outdated scrapings of the open internet.

- The Ethical Mandate: This is where we apply the "Base Rate Inquiry." Was the foundation model trained on data that aligns with our ethics?

- The Risk Assessment: We must identify limitations and potential hallucinations before integration. Bad data isn't just a technical glitch; it’s a failure of integrity.

Role Definition: Eliminating Muda

Borrowing from the Toyota Production System, which relentlessly focuses on eliminating Muda (waste), we engage Cognitive Partners to attack two specific types of waste:

- The Substitution Role (The Toil Killer): This role automates repetitive, low-variance work: the "toil" that causes human burnout. The AI acts as a 24/7 Incident Manager or Data Analyst. The goal isn't to replace the human; it's to replace the drudgery, freeing the human to focus on complex system design.

- The Augmentation Role (The Expert on Demand): This role injects specialized knowledge into high-context work. The AI acts as a Practitioner-Coach or a Compliance Assistant, providing real-time guidance checked against regulatory requirements. It helps each decision align with those requirements and makes scarce expertise available on demand.

The Dual Curriculum (Onboarding)

You wouldn't hire a brilliant graduate and expect them to know your company's acronyms on Day 1. The same applies to AI. We face a dual fluency challenge:

- Training the AI (From LLM to LOM): The AI must learn your organization's DNA. By fine-tuning models on your internal data and standard operating procedures (SOPs), you move from a generic Large Language Model (LLM) to an organization-specific Large Organizational Model (LOM).

- Training the Humans: This is harder. We must teach employees to be managers of intelligence, not just consumers of it. They need to learn how to define roles, set expectations, and provide structured feedback to their synthetic partners.

Leadership in the Loop: Countering "Algorithm Awe" (The New HiPPO)

In traditional management, we often fight the HiPPO effect—where teams blindly defer to the Highest Paid Person's Opinion.

With AI, we face a digital variant of this risk: Algorithm Awe. This is the tendency to over-trust output simply because it appears authoritative, coherent, and comes from a "smart" machine.

The risk isn't just that the AI will go rogue; it's that the human team will fall asleep at the wheel. As leaders, we must enforce Cognitive Security Protocols:

- Steelman the Argument: I encourage my Cognitive Partners (and my human teams) to act as co-creators. I want the AI to challenge my assumptions. I want it to present the strongest version of the opposing view before we finalize a decision.

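- The "Pushback" Principle: The AI’s output is data-informed input, not a final verdict. The human leader’s job is to apply critical scrutiny and the "Outside View": asking how similar cases have typically turned out, rather than judging the case only on its own persuasive details.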

The Legacy of AI Fluency

Ultimately, this strategy is about more than efficiency. It is about returning to a human-centric workplace.

When we strip away the toil, the data entry, and the repetitive grinding, we are left with the things that make us human: empathy, adaptability, critical thinking, and creative problem-solving.

By treating AI with the rigor of a true partner, we mitigate the risks of bias and hallucination. But more importantly, we free our human capital to focus on the divergent challenges that make work fun and fulfilling. We aren't building a workforce of machines. We are building a workforce of better humans, supported by the most disciplined Cognitive Thinking Partners we have ever hired.

Srini Koushik is the CEO and Founder of Right Brain Labs, an AI Innovation Lab and CxO Advisory based in Columbus. An AI Top 50 Thinker and inductee into the CIO and CTO Hall of Fame, he draws on over 35 years of leadership experience across startups and Fortune 100 companies to balance strategic vision with hands-on execution. Find out more at www.rightbrainlabs.ai